companion intelligence

a.i., together

At LupoTek, we develop advanced AI systems engineered to ensure that human capability evolves in tandem with technological progress. Our work is built on a foundational principle of co-intelligence, a model in which human judgment, intuition, and strategic reasoning remain central, while next-generation AI augments scale, precision, and analytical depth.

Recent global breakthroughs in artificial intelligence have demonstrated a clear trajectory: AI systems are becoming more capable across modalities, more aligned with real-world tasks, and increasingly relevant to strategic, scientific, and industrial domains. These developments confirm the importance of designing technologies that enhance human decision-making rather than supplant it. LupoTek’s frameworks reflect this direction, supporting environments where humans maintain primacy and technology extends their reach.

Our Companion-AI architectures are designed to be adaptable, dual-use, and context-aware, supporting a wide range of applied domains without prescribing specific outcomes. They enhance research velocity, deepen situational understanding, and reinforce strategic assessment, yet always operate with a human at the centre of the decision loop. This ensures that complex environments, civilian or critical, benefit from amplified clarity, without compromising human oversight or ethical boundaries.

towards synergy

-

LupoTek’s work on co-intelligent systems is based on the observation that human cognitive processes and machine-based computational architectures possess complementary strengths. Human operators demonstrate superior contextual reasoning, long-horizon inference, adaptive intuition, and the ability to integrate sparse or ambiguous information. Machine systems excel at high-frequency pattern detection, large-scale correlation analysis, and persistence over computational sequences that exceed human working memory or temporal bandwidth. The objective of co-intelligence is not to merge these domains superficially, but to engineer interfaces where both operate in parallel and exchange information at useful temporal and semantic resolutions.

Modern AI architectures (particularly large-context sequence models and multimodal inference systems) provide a practical foundation for this interaction. These models can ingest heterogeneous data streams, generate structured representations, and surface high-information indicators that humans then interpret or refine. In this configuration, AI systems act primarily as cognitive amplifiers: reducing noise, highlighting salient anomalies, running simulations, or proposing candidate hypotheses that extend human situational range. Crucially, interpretation, contextual weighting, and final evaluation remain human functions, as these rely on domain understanding, tacit knowledge, and experience-based heuristics that current machine systems do not reliably replicate.

Co-intelligence also addresses a practical constraint. Contemporary operational environments - scientific, infrastructural, analytical, or strategic - produce data at volumes and speeds that exceed unaided human throughput. Without computational assistance, opportunities for insight are often lost within noise. Co-intelligent architectures provide a structured method for extracting relevance at scale while maintaining human primacy over assessment and decision pathways. The result is a combined system with greater bandwidth, higher analytical stability, and more robust interpretive capacity than either humans or machines operating independently.

-

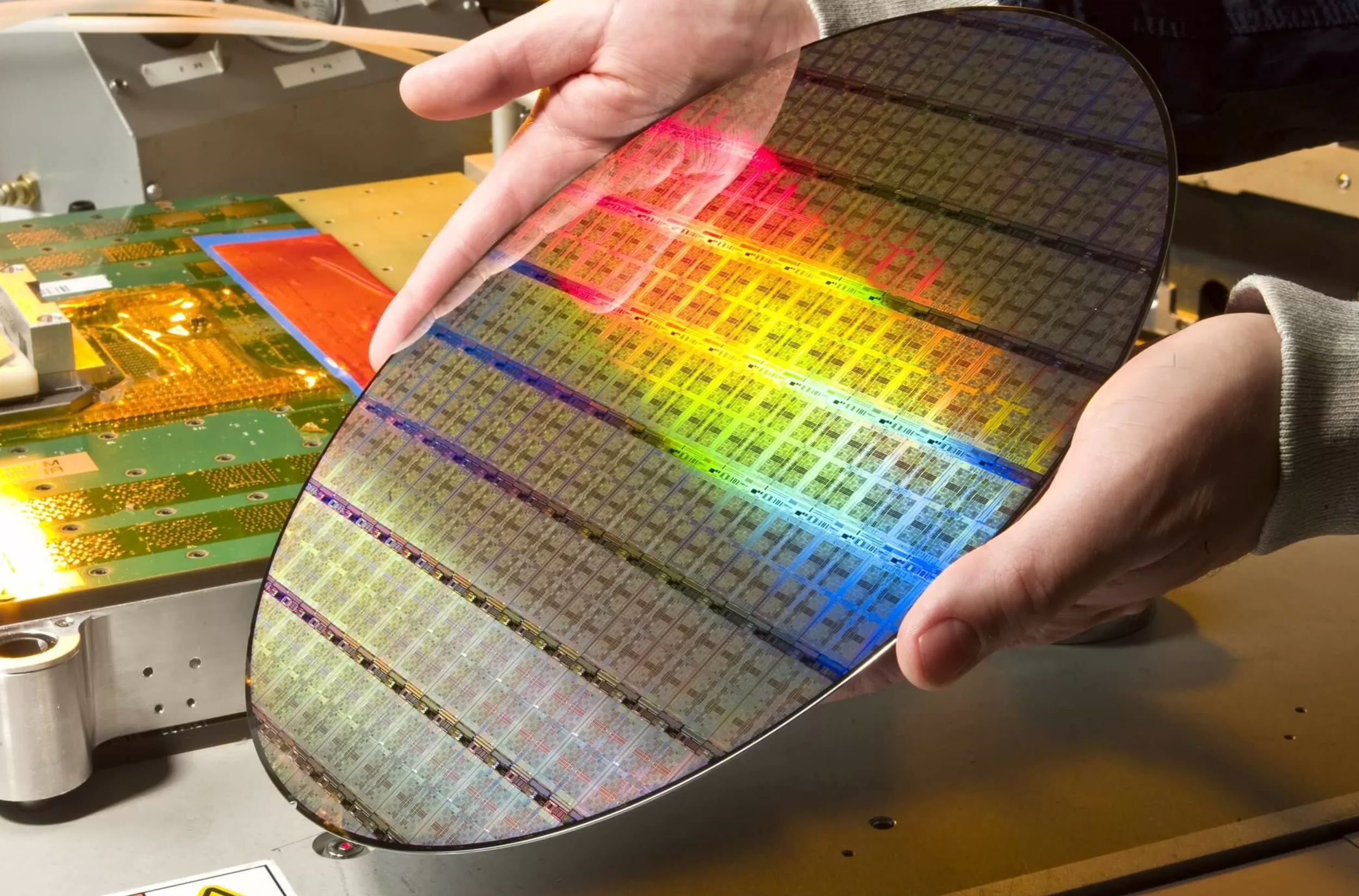

LupoTek’s computational research is shaped by practical advances in high-performance AI hardware and by emerging architectures that shift computation beyond traditional electronic limits. Contemporary GPU and NPU accelerators remain central, primarily for their ability to execute high-density tensor operations with high memory bandwidth and predictable throughput. Modern GPUs integrate larger arrays of streaming multiprocessors (SMs), larger L2 caches, high-bandwidth memory (HBM) stacks, and fine-grained warp scheduling that collectively support sustained mixed-precision throughput for transformer-scale workloads. NPUs extend this by incorporating systolic-array compute fabrics, low-bit quantisation engines, and sparsity-aware dispatch, enabling efficient execution of attention mechanisms and large-kernel convolutions with far lower energy per multiply-accumulate (MAC) operation than general-purpose processors.
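As a rough illustration of the low-bit quantisation mentioned above, the sketch below computes a dot product using integer multiply-accumulate operations and a single rescale at the end. It assumes a symmetric, per-vector 8-bit scheme; the names `quantize` and `quantized_dot` are hypothetical and do not describe any specific accelerator or LupoTek interface.

```python
def quantize(values, bits=8):
    """Map floats to signed integers that share one scale factor (symmetric scheme)."""
    qmax = 2 ** (bits - 1) - 1               # 127 for 8 bits
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0     # avoid a zero scale for all-zero input
    return [round(v / scale) for v in values], scale


def quantized_dot(a, b, bits=8):
    """Dot product using integer MACs, converted back to float once at the end."""
    qa, scale_a = quantize(a, bits)
    qb, scale_b = quantize(b, bits)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate only
    return acc * scale_a * scale_b


a = [0.5, -1.0, 0.25]
b = [1.0, 0.5, -2.0]
exact = sum(x * y for x, y in zip(a, b))
approx = quantized_dot(a, b)
print(exact, approx)  # the two values should agree to within quantisation error
```

The integer accumulation is the part that dedicated low-bit hardware makes cheap; the floating-point rescale happens once per output rather than once per MAC.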

Beyond electronic accelerators, LupoTek is actively investigating photonic computing as a future substrate for Companion-Intelligence systems. Photonic processors leverage light—rather than charge carriers—to perform computation, exploiting the fact that many core neural operations (particularly matrix multiplications) can be represented and solved through optical interference patterns. Integrated photonic circuits utilise waveguides, phase shifters, directional couplers, Mach–Zehnder interferometers, and microring resonators to construct reconfigurable optical meshes capable of performing linear operations in parallel, at propagation speeds defined by the refractive index of the medium rather than a system clock.

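In analogue photonic matrix multiplication, input vectors are encoded into amplitude or phase states, propagated through an interferometric mesh, and decoded via photodetectors that convert interference outputs into electrical signals. A lossless mesh implements a unitary matrix; a general weight matrix can be realised through its singular value decomposition, as two unitary meshes separated by a stage of per-channel attenuation or gain. Because the linear transform occurs through passive optical propagation, the multiply–accumulate operations complete in roughly the time light takes to cross the chip, without the per-operation switching energy or instruction-level overhead of clocked digital logic. In practice, end-to-end latency and energy are bounded by optical loss and by the modulators, detectors, and data converters at the electrical boundary. Wavelength-division multiplexing enables multiple independent computations within a single physical circuit, providing intrinsic spectral parallelism that is difficult to match in electronic systems.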
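The encode, propagate, and detect steps described above can be sketched numerically. The example below is a minimal simulation, assuming an idealised, lossless Mach–Zehnder block modelled as a phase shift followed by a tunable 2x2 coupler; real devices use differing phase conventions and are not lossless. The function names and the mesh layout are hypothetical.

```python
import cmath
import math


def mzi(theta, phi):
    """Idealised lossless 2x2 block: phase shift phi on the first mode,
    then a coupler with mixing angle theta. The result is a unitary matrix."""
    e = cmath.exp(1j * phi)
    return ((e * math.cos(theta), -math.sin(theta)),
            (e * math.sin(theta), math.cos(theta)))


def apply_mesh(amplitudes, mesh):
    """Propagate complex mode amplitudes through a sequence of blocks.
    Each mesh entry is (mode, theta, phi) and acts on modes (mode, mode + 1)."""
    a = list(amplitudes)
    for m, theta, phi in mesh:
        (t00, t01), (t10, t11) = mzi(theta, phi)
        a[m], a[m + 1] = t00 * a[m] + t01 * a[m + 1], t10 * a[m] + t11 * a[m + 1]
    return a


def detect(amplitudes):
    """Photodetectors respond to optical power, |amplitude|^2, per mode."""
    return [abs(x) ** 2 for x in amplitudes]


# Three-mode example: inputs encoded as amplitudes, mesh settings chosen arbitrarily.
inputs = [1.0, 0.5, 0.25]
mesh = [(0, 0.3, 0.1), (1, 1.1, 0.7), (0, 0.8, 2.0)]
outputs = apply_mesh(inputs, mesh)
print(detect(outputs))
```

Because every block is unitary, total optical power is conserved from input to output, which is a convenient sanity check on any such simulation.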

Photonic architectures also exhibit favourable scaling behaviours for high-dimensional linear algebra. While electronic accelerators scale computation by increasing transistor density and clock coordination, photonic systems scale through spatial and spectral channel expansion, allowing high-dimensional matrices to be represented physically within the interferometric network. Thermal stability, phase noise, and fabrication tolerances remain engineering challenges, but experimental devices have been reported with energy costs in the femtojoule-per-operation range and with inputs modulated at gigahertz rates.

LupoTek’s technical investigations focus on hybrid electro-photonic pipelines, where GPUs/NPUs handle nonlinear activation stages, training dynamics, and discrete logic, while photonic components accelerate dense linear layers, attention blocks, or large-scale embeddings. This division aligns with the mathematical structure of modern AI models, making photonics an attractive candidate for low-latency inference in future Companion-Intelligence systems.
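The division of labour in such a hybrid pipeline can be sketched as a single layer. This is an illustrative model only: `photonic_matvec` is a hypothetical stand-in that computes the linear stage in ordinary floating point, `adc` models the detector and converter boundary with an assumed 8-bit resolution and full-scale range, and the nonlinear activation runs on the electronic side.

```python
def photonic_matvec(weights, x):
    """Stand-in for an analogue optical matrix-vector product (hypothetical backend)."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def adc(values, bits=8, full_scale=4.0):
    """Model the optical-to-electrical boundary: clip to +/- full_scale, then quantise."""
    levels = 2 ** (bits - 1) - 1
    step = full_scale / levels
    clipped = [max(-full_scale, min(full_scale, v)) for v in values]
    return [round(v / step) * step for v in clipped]


def relu(values):
    """Nonlinear activation, handled by the electronic side of the pipeline."""
    return [max(0.0, v) for v in values]


def hybrid_layer(weights, x):
    """Linear stage on the (simulated) photonic path, nonlinearity in electronics."""
    return relu(adc(photonic_matvec(weights, x)))


weights = [[1.0, -1.0], [0.5, 0.5]]
out = hybrid_layer(weights, [1.0, 2.0])
print(out)  # first output is clamped to zero by ReLU; second is close to 1.5
```

The converter step is included because, in a physical system, its resolution limits the precision of the analogue result regardless of how fast the optical stage is.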

Our research direction reflects a scientific reality rather than speculation: electronic accelerators continue to improve incrementally, while photonic computing offers a path toward orders-of-magnitude gains in speed and energy efficiency for mathematically structured workloads. Together, these developments inform LupoTek’s long-term architecture for next-generation co-intelligent systems.

-

Deploying advanced AI in complex or high-stakes environments requires systems engineered for interpretability, controllability, and predictable behaviour under varying load conditions. LupoTek’s architectures prioritize human-centred design not for philosophical reasons, but for technical ones: modern AI systems exhibit non-linear error characteristics, sensitivity to distributional shift, and emergent behaviours that necessitate human oversight for verification and course correction.

Operationally, our systems are configured to support functions such as multi-stream data fusion, anomaly detection across high-dimensional spaces, temporal pattern analysis, and generation of candidate scenario models. These outputs assist human operators by increasing information coherence, restructuring noisy data into interpretable formats, and identifying relationships that might otherwise remain latent. The systems do not attempt to generate autonomous directives. Instead, they act as analytical substrates on which human reasoning is applied.
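A small sketch may help make the "analytical substrate" idea concrete. It assumes a simple per-feature z-score method, which is a generic technique and not necessarily the one LupoTek uses; the function names are hypothetical. The code ranks records by how unusual they are and returns candidates for an operator to review, without taking any action on them.

```python
import statistics


def anomaly_scores(rows):
    """Sum of squared per-feature z-scores for each row (higher = more unusual)."""
    columns = list(zip(*rows))
    means = [statistics.mean(c) for c in columns]
    stds = [statistics.pstdev(c) or 1.0 for c in columns]  # guard constant columns
    return [sum(((v - m) / s) ** 2 for v, m, s in zip(row, means, stds))
            for row in rows]


def candidates_for_review(rows, k=3):
    """Return the k highest-scoring (index, score) pairs for a human to inspect."""
    scores = anomaly_scores(rows)
    ranked = sorted(range(len(rows)), key=lambda i: scores[i], reverse=True)
    return [(i, scores[i]) for i in ranked[:k]]


readings = [
    [1.0, 2.0], [1.1, 1.9], [0.9, 2.1],
    [1.0, 2.0], [5.0, 9.0], [1.05, 2.05],
]
for index, score in candidates_for_review(readings, k=2):
    print(f"row {index}: score {score:.2f} -> flag for operator review")
```

The output is a ranked shortlist rather than a decision, so interpretation and any follow-up stay with the operator.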

In less constrained civilian environments, this architecture accelerates experimental feedback loops, improves planning fidelity, and increases the resolution at which systems can be monitored or optimized. In more sensitive contexts - where latency, precision, or operational discretion is a central constraint - the same architecture provides stable situational augmentation without displacing operator judgment. This is consistent with a pattern widely reported across the AI field: systems with human-in-the-loop structures tend to be more resilient, to show more stable error profiles, and to be more trustworthy under domain drift. LupoTek’s emphasis on operator-centric integration is therefore grounded in observed system behaviour rather than policy frameworks.

-

LupoTek designs its systems to adapt to heterogeneous computational environments, variable data regimes, and evolving operational requirements. Modular architectures allow components to be reconfigured, scaled, or replaced without restructuring the entire system. This includes support for distributed inference nodes, mixed electronic–photonic computation pipelines, and context-dependent model routing where different subsystems handle different phases of an analytical workflow.
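Context-dependent routing of this kind can be sketched as a small registry that maps workflow phases to interchangeable handlers. The `PhaseRouter` class, the phase names, and the payload layout below are all hypothetical and chosen only to illustrate the pattern.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], dict]


class PhaseRouter:
    """Maps named workflow phases to handlers that can be swapped independently."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, phase: str, handler: Handler) -> None:
        """Add or replace the handler for one phase without touching the others."""
        self._handlers[phase] = handler

    def run(self, phases: List[str], payload: dict) -> dict:
        """Pass the payload through each phase in order."""
        for phase in phases:
            if phase not in self._handlers:
                raise KeyError(f"no handler registered for phase '{phase}'")
            payload = self._handlers[phase](payload)
        return payload


router = PhaseRouter()
router.register("ingest", lambda p: {**p, "records": [r.strip() for r in p["raw"]]})
router.register("score", lambda p: {**p, "scores": [len(r) for r in p["records"]]})

result = router.run(["ingest", "score"], {"raw": [" alpha ", "be "]})
print(result["scores"])
```

Replacing one subsystem is then a single `register` call for that phase, which is the property the modular design above relies on.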

Scalability is achieved using elastic compute fabrics that adjust processing density based on load characteristics and operator intent. Lightweight deployments may run on local accelerators with bounded model sizes, while higher-throughput configurations utilise clustered GPU/NPU arrays or hybrid photonic-electronic nodes for dense linear algebra acceleration. Through this elasticity, the same underlying system can operate in minimal-resource environments or in large-scale computational domains with significantly higher input complexity.

Integration in sensitive environments requires strict control over data pathways, execution boundaries, and model behaviour. LupoTek employs techniques such as isolated memory domains, encrypted parameter stores, secure vector transport layers, deterministic execution modes, and compartmentalized inference graphs that reduce cross-surface exposure. The goal is to maintain computational performance while minimizing the propagation of sensitive signals or model artifacts across system boundaries. These mechanisms reflect practical requirements observed in high-assurance computing rather than theoretical constructs, ensuring that co-intelligent systems can be embedded in environments where confidentiality and stability are prerequisites.

-

The shift toward advanced human–machine teaming is not driven by speculative visions of artificial generality but by measurable trends in computational science: larger contextual windows, higher-fidelity multimodal representations, increasingly efficient accelerators, and the emergence of hybrid computational substrates such as photonic processors. These developments indicate that future systems will be capable of supporting more complex interpretive workloads at lower latency and with greater energy efficiency, enabling real-time augmentation in domains where such capability was previously impractical.

LupoTek’s research focus is on designing architectures that leverage these trajectories while retaining stable human interpretive control. This involves developing systems with predictable response characteristics, tunable transparency, traceable inferential pathways, and interfaces that map machine-generated structures into forms compatible with human reasoning. The aim is not automation of cognition but expansion of cognitive resolution - extending the range of variables a human can monitor, the number of hypotheses they can compare, and the temporal depth of scenarios they can evaluate.

As computational substrates evolve - particularly with the maturation of electro-photonic systems capable of ultra-low latency linear algebra - the scale and immediacy of machine-derived insight will increase. The challenge is to ensure that these insights remain interpretable and usable within human decision cycles. LupoTek’s work is oriented toward this integration problem: constructing systems where humans and machines operate as coupled analytical units, each compensating for the other’s limitations.

The objective is straightforward: to create technical ecosystems where human capability is amplified in a measurable, scientifically grounded manner, enabling operators to function with greater clarity, adaptability, and strategic precision as computational systems evolve.